junk-busters

This project addresses the SpaceBot Stereo Vision challenge.

Description

Welcome,

we are

The Problem: Space Junk

The increasing amount of space junk [1] is a human-made problem. Our idea is to use the NASA SPHERES platform [2] to develop autonomous satellites with stereo vision cameras that actively remove space junk.

Figure 1 Illustration of Space Junk by David Shikomba

Our Solution: Paired SPHERES

Our vision consists of two or more paired SPHERES: an observatory unit and one or more operational units. Together they can detect space debris and remove it safely, for example by using an ion thruster or by attaching a chemical thruster to old satellites.

Figure 2 SPHERES with VERTIGO extension for stereo vision [3]

Utilizing Stereo Vision

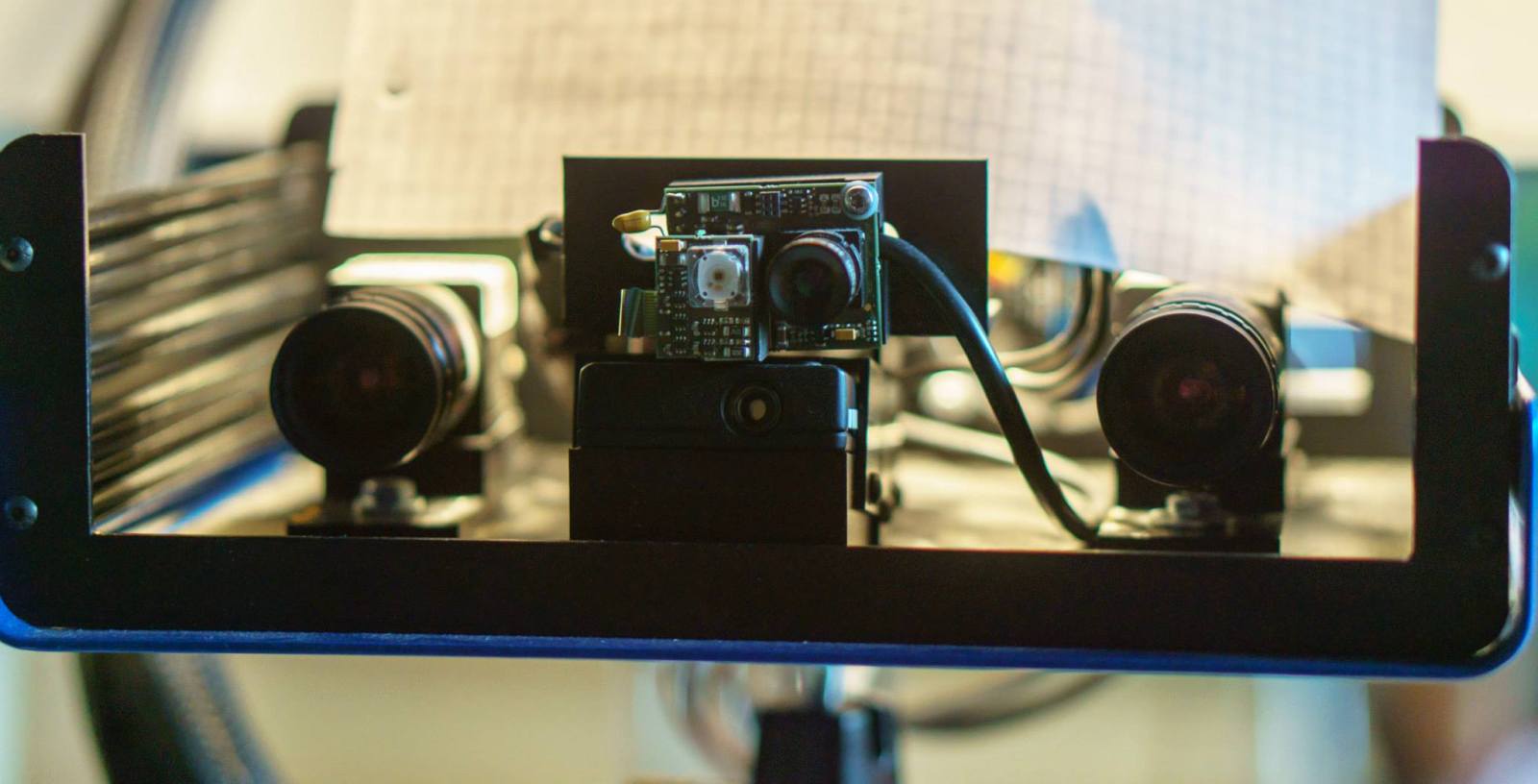

With this idea in mind, we used a real stereo camera setup (IDS uEye, see Figure 4), our laptop webcams, and virtual demos to develop our implementation.

Figure 4 Front view of our stereo sensor


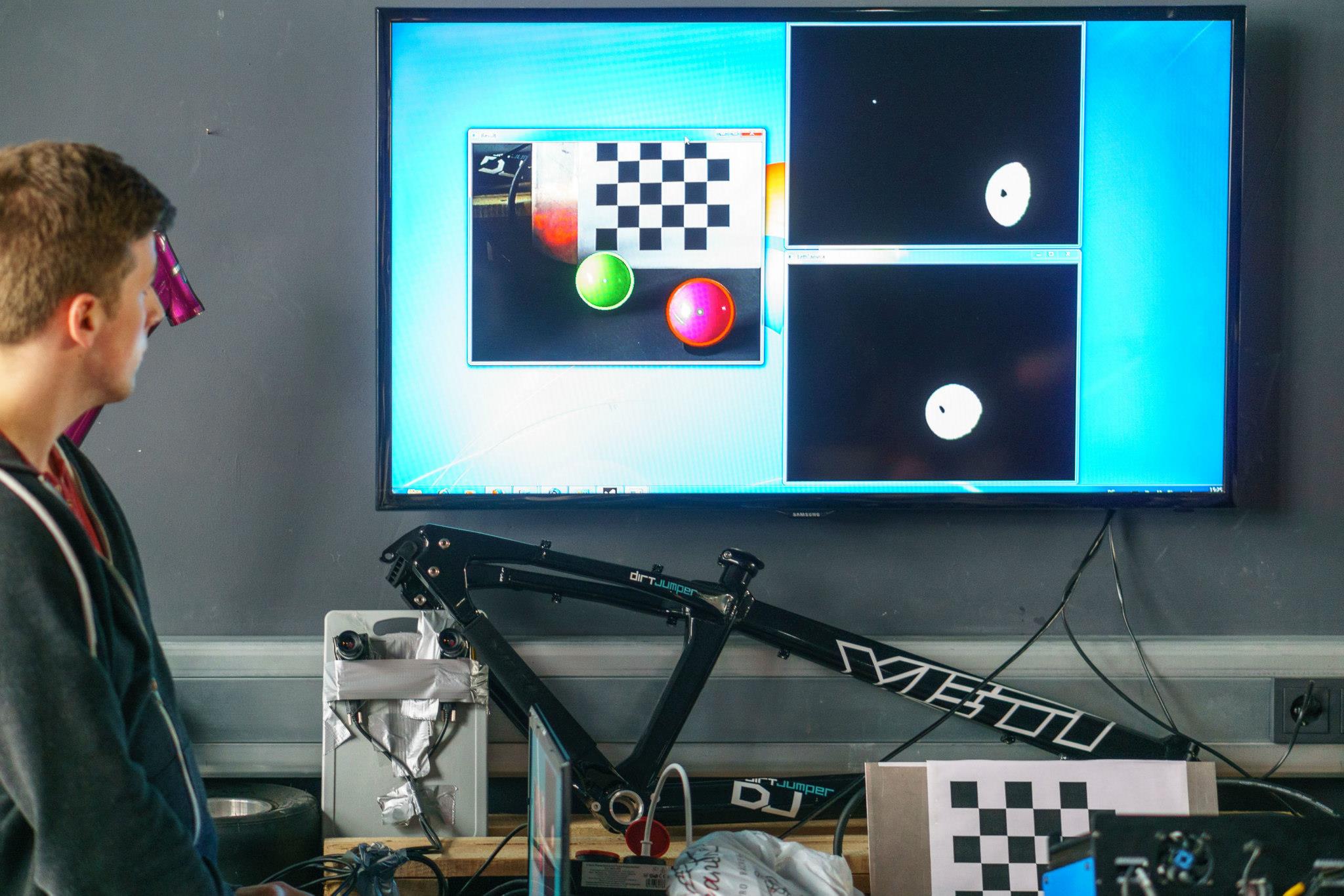

Figure 5 shows our live demo setup with some calibration targets. The magenta ball simulates a piece of space junk and the green ball is an approaching junk buster unit. The whole scene is observed by our stereo sensor, whose two images are used to reconstruct the 3D scene.

Figure 5 Demo Setup of our Camera Environment
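To illustrate how a stereo sensor can recover 3D positions, here is a minimal sketch of triangulation for a rectified, calibrated camera pair. It uses the standard pinhole relation Z = f * B / d (focal length f in pixels, baseline B, disparity d). The names `StereoRig`, `Point3` and `triangulate` are hypothetical and do not come from the project's source code, which relies on OpenCV for this step.

```cpp
#include <cassert>

// Parameters of a rectified stereo pair (hypothetical names, for illustration only).
struct StereoRig {
    double focal_px;    // focal length in pixels
    double baseline_m;  // distance between the two camera centres in metres
    double cx;          // principal point, horizontal pixel coordinate
    double cy;          // principal point, vertical pixel coordinate
};

struct Point3 {
    double x, y, z;  // metres, in the left camera frame
};

// Triangulate a point seen at (u_left, v) in the left image and (u_right, v)
// in the right image of a rectified pair.
// Returns false when the disparity is not positive (point at infinity or a
// mismatched correspondence); `out` is left untouched in that case.
bool triangulate(const StereoRig& rig, double u_left, double u_right, double v,
                 Point3& out) {
    assert(rig.focal_px > 0.0 && rig.baseline_m > 0.0);
    const double disparity = u_left - u_right;
    if (disparity <= 0.0) {
        return false;
    }
    const double z = rig.focal_px * rig.baseline_m / disparity;  // depth
    const double x = (u_left - rig.cx) * z / rig.focal_px;       // right of the optical axis
    const double y = (v - rig.cy) * z / rig.focal_px;            // below the optical axis
    out = Point3{x, y, z};
    return true;
}
```

As an example, with an assumed focal length of 800 px and a 0.1 m baseline, a ball centre found at column 360 in the left image and column 320 in the right image has a disparity of 40 px and therefore lies about 2 m in front of the sensor.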

Technical Implementation

We created a set of software tools to demonstrate our vision and concept. There are two main parts: image processing with 3D reconstruction, and data interpretation with visualization.

OpenCV Image Processing and 3D reconstruction

We used the OpenCV framework to solve the following stereo vision image processing tasks [4] in real time:

- o Stereo Image Acquisition

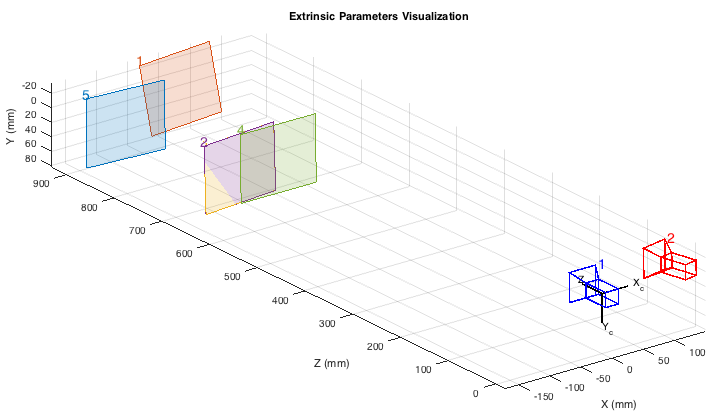

- o Camera Calibration using a checkerboard calibration target

- o Color Segmentation and Binarization

- o Object Tracking: Colored balls and taught patterns

Figure 6 Stereo Camera Calibration

Figure 7 Live Object Tracking

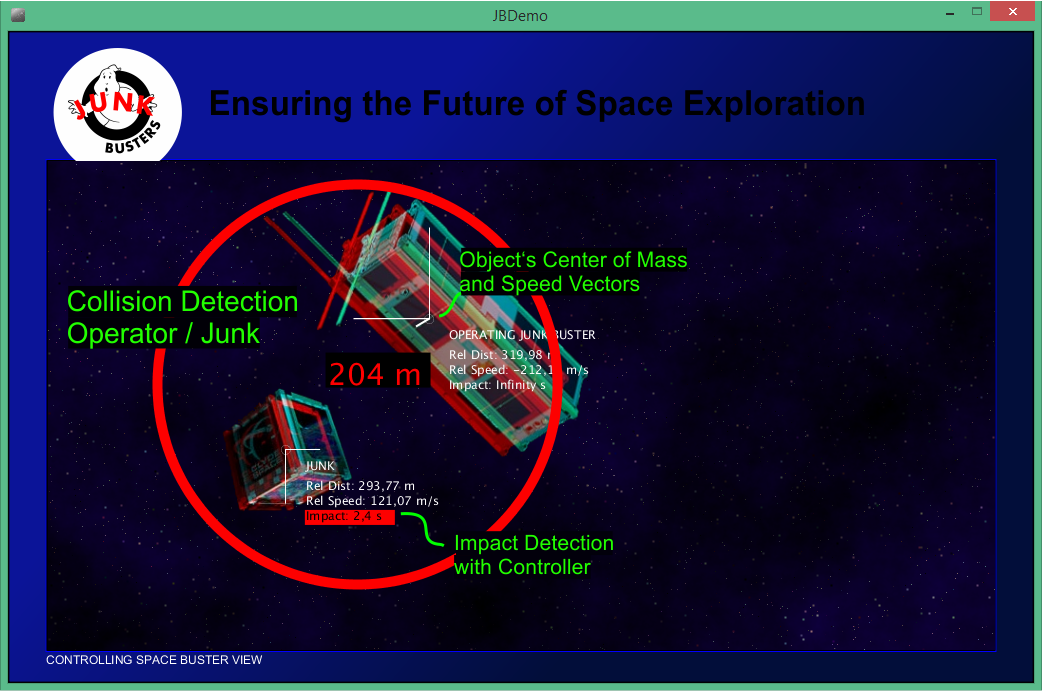
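The color segmentation, binarization and ball tracking steps listed above can be sketched without any library as follows. This is only an illustration under stated assumptions: the project itself used OpenCV, and the per-channel RGB thresholds, the row-major image layout and all names (`Rgb`, `ColorRange`, `binarize`, `mask_centroid`) are hypothetical choices made to keep the example self-contained.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Rgb {
    std::uint8_t r, g, b;
};

// Inclusive per-channel bounds describing the target color (assumed thresholds).
struct ColorRange {
    Rgb lo, hi;
};

struct Centroid {
    double u, v;         // mean column and row of the segmented pixels
    std::size_t pixels;  // number of pixels inside the color range
};

// Binarization: 1 where the pixel lies inside the color range, 0 elsewhere.
std::vector<std::uint8_t> binarize(const std::vector<Rgb>& image,
                                   const ColorRange& range) {
    std::vector<std::uint8_t> mask(image.size(), 0);
    for (std::size_t i = 0; i < image.size(); ++i) {
        const Rgb& p = image[i];
        const bool inside = p.r >= range.lo.r && p.r <= range.hi.r &&
                            p.g >= range.lo.g && p.g <= range.hi.g &&
                            p.b >= range.lo.b && p.b <= range.hi.b;
        mask[i] = inside ? 1 : 0;
    }
    return mask;
}

// Centroid of all set pixels in a row-major mask of the given width.
// Returns false if the mask is empty, i.e. the object is not visible.
bool mask_centroid(const std::vector<std::uint8_t>& mask, std::size_t width,
                   Centroid& out) {
    assert(width > 0 && mask.size() % width == 0);
    double sum_u = 0.0;
    double sum_v = 0.0;
    std::size_t count = 0;
    for (std::size_t i = 0; i < mask.size(); ++i) {
        if (mask[i] != 0) {
            sum_u += static_cast<double>(i % width);
            sum_v += static_cast<double>(i / width);
            ++count;
        }
    }
    if (count == 0) {
        return false;
    }
    out = Centroid{sum_u / static_cast<double>(count),
                   sum_v / static_cast<double>(count), count};
    return true;
}
```

Running this once per camera image yields one centroid per view for each colored ball. The horizontal offset between the left and right centroids is the disparity from which the ball's depth can be estimated.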

Concept Demo: Data Processing and Visualization

In order to demonstrate our vision of the Junk Buster SpaceBots we created an interactive live demo with exemplary space trajectories. Features:

- Data Processing: Numeric calculation of distance, speed and acceleration

- Visualization: Movements of the objects in 3D

- Alarm features: Collision alarm between all objects

- Impact prediction: Time to impact with alarm levels

- Interactivity: The operational Junk Buster follows the mouse. Scrolling changes its depth value.

- Exemplary Space Trajectories: Generating data to animate the demo

Figure 8 Dashboard User Interface with Annotations


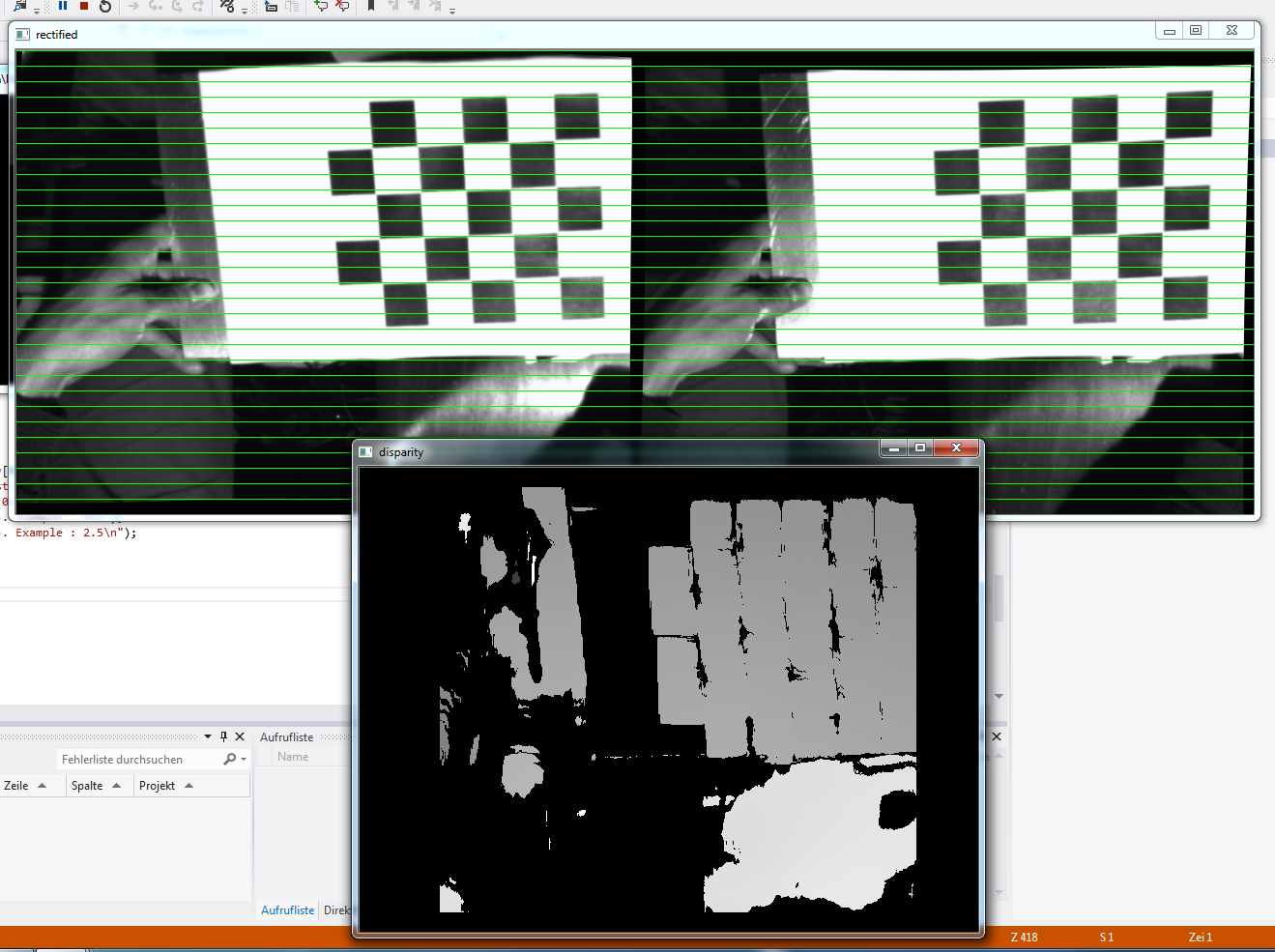
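The numeric features of the demo (distance, speed, acceleration, time to impact and alarm levels) could be computed along the following lines. This is a hedged sketch, not the project's actual code: it assumes positions sampled at a fixed time step, uses simple finite differences, and estimates time to impact as range divided by closing speed under constant relative velocity. All names and the alarm thresholds are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

struct Vec3 {
    double x, y, z;
};

Vec3 operator-(const Vec3& a, const Vec3& b) {
    return Vec3{a.x - b.x, a.y - b.y, a.z - b.z};
}
double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
double length(const Vec3& v) { return std::sqrt(dot(v, v)); }

// Distance between two tracked objects.
double separation(const Vec3& a, const Vec3& b) { return length(a - b); }

// Velocity from two consecutive positions (backward difference).
Vec3 velocity(const Vec3& prev, const Vec3& curr, double dt) {
    assert(dt > 0.0);
    const Vec3 d = curr - prev;
    return Vec3{d.x / dt, d.y / dt, d.z / dt};
}

// Acceleration from three consecutive positions (second-order difference).
Vec3 acceleration(const Vec3& p0, const Vec3& p1, const Vec3& p2, double dt) {
    assert(dt > 0.0);
    const double s = 1.0 / (dt * dt);
    return Vec3{(p2.x - 2.0 * p1.x + p0.x) * s,
                (p2.y - 2.0 * p1.y + p0.y) * s,
                (p2.z - 2.0 * p1.z + p0.z) * s};
}

// Time to impact = range / closing speed, assuming constant relative velocity.
// rel_pos and rel_vel are the position and velocity of one object relative to
// the other. Returns infinity when the range is not shrinking.
double time_to_impact(const Vec3& rel_pos, const Vec3& rel_vel) {
    const double closing = -dot(rel_pos, rel_vel);  // range * closing speed
    if (closing <= 0.0) {
        return std::numeric_limits<double>::infinity();
    }
    return dot(rel_pos, rel_pos) / closing;
}

enum class Alarm { None, Warning, Critical };

// Map a time to impact in seconds onto an alarm level (assumed thresholds).
Alarm alarm_level(double tti_s, double warning_s, double critical_s) {
    if (tti_s <= critical_s) return Alarm::Critical;
    if (tti_s <= warning_s) return Alarm::Warning;
    return Alarm::None;
}
```

For instance, an object 10 m away that approaches head-on at 2 m/s gives a time to impact of 5 s, which maps to a warning if the warning threshold is 10 s and the critical threshold is 3 s. A receding object yields an infinite time to impact and no alarm.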

MATLAB Trials

The original plan was to code a proof of concept in MATLAB using several toolboxes. In practice we ran into issues, so we made a no-go decision for MATLAB and switched to C++ with OpenCV. The initial space trajectory processing was developed in MATLAB and later ported to C and Java (Processing).

Figure 9 Results of Stereo Camera Calibration with MATLAB

Conclusion: Saving the Future of Space Exploration

By using an optical method of debris detection on board the SpaceBots, they can track and remove objects that existing ground-based tracking systems cannot track with the needed accuracy. We use the stereo data to deliver 3D information about approaching space debris. The existing NASA SPHERES program offers an opportunity to test our systems, which can then be used for the greater purpose of removing space junk.

We think it is time to start saving the future of space exploration now!

Acknowledgments

Thanks to the staff at Lab75, our host for the Space Apps Challenge 2015 in Frankfurt. We also thank Prof. Dr. Stephan Neser for supporting us with the stereo camera equipment and MATLAB advice.

References

[1] "Orbital Debris FAQ: How many orbital debris are currently in Earth orbit?", NASA, March 2012.

[2] SPHERES Satellites, NASA, August 2013.

[3] The Visual Estimation for Relative Tracking and Inspection of Generic Objects (VERTIGO) program, MIT Space Systems Laboratory.

[4] Camera Calibration and 3D Reconstruction, OpenCV, February 2015.

Project Data

About the Authors

Eduardo Cruz

Software Engineer

Tim Elberfeld

Student of Photonics and Computer Vision

Marcel Kaufmann

Student of Photonics and Computer Vision

Sebastian Schleemilch

Student of Electronic and Medical Engineering

Philipp Schneider

Student of Photonics and Computer Vision

From left to right: Philipp, Tim, Astro-Jared, Marcel, Eduardo, Sebastian.

JUNK-BUSTERS Team with Astro-Jared at Lab75, Frankfurt on 12. April 2015.

This project was worked on during the NASA Space Apps Challenge 2015 (11.-12.04.2015).

Last updated: 19.04.2015.

Project Information

License: MIT license (MIT)

Source Code/Project URL: https://github.com/mace301089/NASASpaceAppsChallenge