starc-industries

This project is solving the SpaceGloVe: Spacecraft Gesture and Voice Commanding challenge.

Description

It's time for a revolution

Gesture-based computing is not a new technology, but current systems such as the Microsoft Kinect and Leap Motion have drawbacks: they must be calibrated, they are not portable and they are not practical, especially in the context of space-based computing. It's time for a revolution.

At Starc Industries, we have designed Midas, a gesture-based communication framework that addresses the above issues and provides an interface to gesture-based computing that is intuitive, unique and fun to use. Midas's primary use is to extend a computer for a user whose hands are full with other tasks. With the new framework, they can operate the computer in an easy, hands-free manner.

What's the big picture?

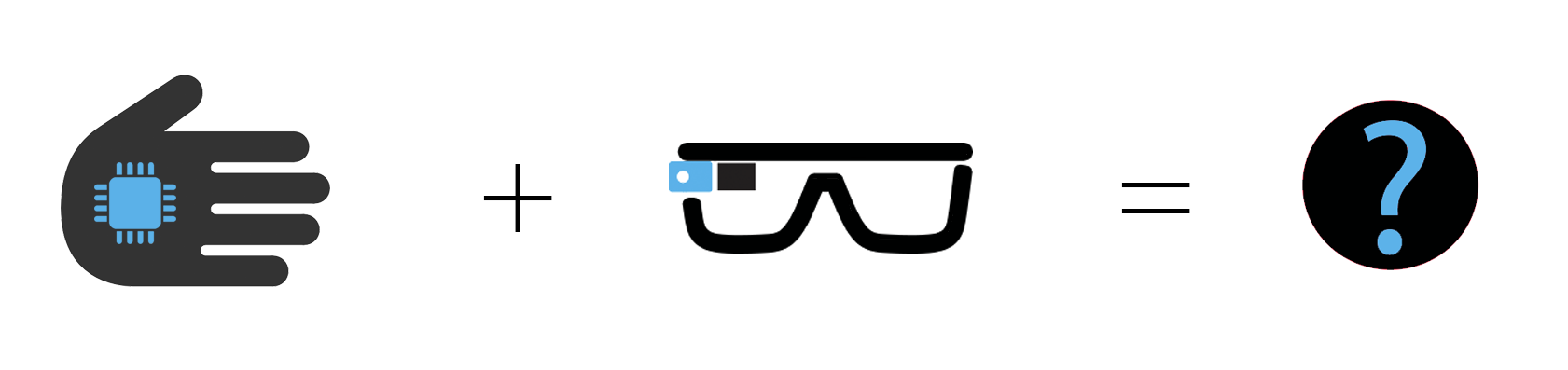

At the core of the system is a gyroscope mounted on the wrist, which measures the rotational velocity of the hand. As the wrist turns, the program converts this data into gesture information and recognises gestures. These gestures are sent directly to a heads-up display (HUD), where they are used to navigate through the display. To control what information is shown on the HUD, the astronaut uses voice commands, for example "Show me my email". The email account is then shown as one of many views on the HUD, through which the astronaut can navigate by performing swipe motions.
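As a minimal sketch of how rotational velocity could be turned into gestures, the Python below flags a swipe whenever the angular rate about one wrist axis crosses a threshold, then ignores a few samples so that a single flick is not counted twice. The class name `SwipeDetector`, the 3.0 rad/s threshold, the cooldown length and the mapping of sign to direction are all illustrative assumptions, not values taken from the Midas prototype.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass
class SwipeDetector:
    """Threshold-based swipe detector (hypothetical, for illustration only)."""

    threshold: float = 3.0  # rad/s; assumed value, would need tuning on real data
    cooldown: int = 5       # samples to skip after a detection (assumed)
    _quiet: int = 0         # remaining samples to ignore

    def update(self, rate: float) -> Optional[str]:
        """Feed one angular-rate sample; return a gesture name or None."""
        if self._quiet > 0:
            # Still inside the cooldown window of the previous swipe.
            self._quiet -= 1
            return None
        if rate > self.threshold:
            self._quiet = self.cooldown
            return "swipe_left"   # sign-to-direction mapping is an assumption
        if rate < -self.threshold:
            self._quiet = self.cooldown
            return "swipe_right"
        return None


def detect_swipes(rates: Iterable[float]) -> List[str]:
    """Run a fresh detector over a sequence of samples and collect gestures."""
    detector = SwipeDetector()
    gestures = []
    for rate in rates:
        gesture = detector.update(rate)
        if gesture is not None:
            gestures.append(gesture)
    return gestures
```

A fixed threshold keeps the sketch short; a real detector would probably also need filtering to reject noise and slow arm movements.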

Alternatively, wireless sensors could be integrated into the network, and their data could be streamed to the PC and pushed out to the HUD over the network. Again, the astronaut would be able to instruct the network to send this data to the HUD via voice command. As an example of how such secondary data would fit into the system, we have collaborated with Binaryticos in Costa Rica. They have developed an app that provides information on an astronaut's vital statistics, such as hydration and blood oxygen levels. We would be able to take the information from their health sensors, push it over our network, and display a user's vital statistics on their HUD. We're grateful to Binaryticos for providing this information from their "Down to Earth" project.
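One way to picture this routing is a small dispatcher on the PC that maps a recognised voice intent to a data source and wraps the result in a message for the HUD. The sketch below is purely illustrative: `HudRouter`, the `show_vitals` intent name, the JSON message shape and the placeholder readings are all assumptions, and nothing here reflects the actual Binaryticos data format.

```python
import json
from typing import Callable, Dict, Optional


class HudRouter:
    """Maps voice intents to data sources (hypothetical design)."""

    def __init__(self) -> None:
        self._sources: Dict[str, Callable[[], dict]] = {}

    def register(self, intent: str, source: Callable[[], dict]) -> None:
        """Associate an intent name with a function that reads sensor data."""
        self._sources[intent] = source

    def handle(self, intent: str) -> Optional[str]:
        """Return a JSON message for the HUD, or None for an unknown intent."""
        source = self._sources.get(intent)
        if source is None:
            return None
        return json.dumps({"view": intent, "data": source()}, sort_keys=True)


def read_vitals() -> dict:
    # Placeholder values standing in for a real health-sensor reading.
    return {"hydration_pct": 72, "blood_oxygen_pct": 98}


router = HudRouter()
router.register("show_vitals", read_vitals)
message = router.handle("show_vitals")  # JSON string ready to send to the HUD
```

Keeping the sources behind plain callables means a new sensor can be added by registering one more function, without changing the dispatcher.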

Where are we now?

The system is currently in a prototyping phase. Kinematic information is gathered from a smartphone attached to the wrist. The data is broadcast over a UDP socket to a computer, where it is analysed. This network uses the same infrastructure that the final implementation will use. By monitoring the rotational velocity of the hand, the computer is able to detect swiping gestures. These are then used to switch between different pages of a customised website. The webpage simulates a space station crew member switching between different views on a HUD; it stands in for a real HUD because the hardware was not available. In the final system, the gesture data will be sent over the network directly to a HUD, rather than to a computer. We are also able to translate natural speech into computer commands, ready to send over the network to a main PC, where the next step would be to return data to the HUD.
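The receiving side of such a prototype could look roughly like the sketch below, which listens for UDP datagrams and extracts the gyroscope reading from each one. It assumes each packet is a comma-separated ASCII line of the form `timestamp, sensor_id, x, y, z, sensor_id, x, y, z, ...` with the gyroscope identified by sensor id 4; that layout, the function names and the port number in the usage note are assumptions to be checked against the smartphone app's documentation.

```python
import socket
from typing import Callable, Optional, Tuple

GYRO_SENSOR_ID = 4  # assumed id of the gyroscope block in each packet
Vector = Tuple[float, float, float]


def parse_gyro(packet: bytes) -> Optional[Vector]:
    """Return (x, y, z) angular rates from a packet, or None if absent/invalid."""
    try:
        fields = [f.strip() for f in packet.decode("ascii").split(",")]
        i = 1  # field 0 is assumed to be the timestamp
        while i + 3 < len(fields):
            if int(float(fields[i])) == GYRO_SENSOR_ID:
                return (float(fields[i + 1]),
                        float(fields[i + 2]),
                        float(fields[i + 3]))
            i += 4  # skip this sensor's id and its three values
    except (UnicodeDecodeError, ValueError):
        return None  # malformed packet
    return None


def listen(port: int, on_gyro: Callable[[Vector], None]) -> None:
    """Block forever, calling on_gyro for every gyroscope reading received."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("", port))
        while True:
            packet, _addr = sock.recvfrom(1024)
            gyro = parse_gyro(packet)
            if gyro is not None:
                on_gyro(gyro)
```

Calling `listen(5555, print)` would print each reading as it arrives, where 5555 is only an example port. Because UDP is connectionless, a lost packet simply means one missing sample, which is usually acceptable for a high-rate sensor stream.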

What else needs to be done?

The next design iterations of Midas will involve replacing the smartphone with an inertial measurement unit and a microcontroller, built into a device such as a bracelet, clip-on unit or smartwatch-style design. This is expected to significantly reduce the physical footprint of the device, making it smaller and more portable, and to help make it ergonomic, comfortable and non-intrusive. Furthermore, a custom software package will be built for the PC to receive the voice commands from the existing network and broadcast the appropriate information back to the heads-up display.

Intuitive. Seamless. Versatile.

Midas aims to streamline and revolutionise gesture-based control, not through a new core technology but through a superior interface. We aim to redefine the user experience of gesture-based control and make it intuitive, simple, low-cost and enjoyable.

Although Midas has been designed for use on a space station, its potential extends far beyond this single application. It could be useful for a surgeon performing open-heart surgery, a bomb-disposal team, a Formula 1 pit crew, people with disabilities, or anyone else who needs an easy way to navigate through a computer. In summary, we have created an interface through which people can access a computer in an intuitive, hands-free manner.

Project Information

License: GNU General Public License version 3.0 (GPL-3.0)

Source Code/Project URL: https://github.com/zncolaco/starcindustries

Resources

Sensorstream IMU+GPS (Android Wireless IMU) - http://sourceforge.net/projects/smartphone-imu/

Voice Recognition API (Wit) - https://wit.ai/zncolaco/spaceglove